Directory Monitor

Problem

I have a 3 TB hard drive that contains all of our family's files: photos, home movies, documents, software, and so on. These are files we need to retain for a long time, if not forever. That drive is backed up to the cloud by Carbonite.

The problem is that Carbonite does not automatically back up all files; it excludes some based on file type, and those files must be manually selected for backup. Carbonite also retains backups of deleted files for only 30 days. This creates two issues. First, if the hard drive were to fail, the Carbonite backup would not contain all of the files that were on the drive. Second, if someone or something (virus, ransomware, etc.) modifies or deletes a file on the drive, the backup of the original file would be lost after 30 days. The chances of noticing such a change within 30 days are slim. This would result in the permanent loss of valuable, irreplaceable data with no chance of recovery.

Solution

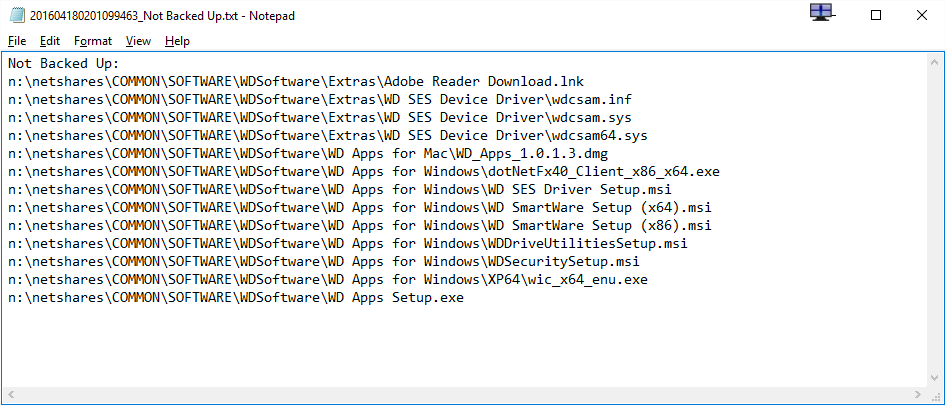

I created a program that runs daily and scans a defined directory and all of its subdirectories, reporting any files that were added, updated (changed file size or modified date), or deleted. It also checks whether each file has been backed up by Carbonite. The program then sends an email with a summary and, where applicable, attachments listing the affected files. This makes it possible to flag files that Carbonite has not backed up for inclusion in the backup, and to recover changed or deleted files before the 30-day retention window expires.
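
The added/updated/deleted comparison described above can be sketched roughly as follows. This is a minimal illustration, not the program's actual code: the names `FileEntry`, `DirectoryDiff`, `Scan`, and `Compare` are hypothetical, and in-memory dictionaries stand in for whatever storage the real program uses (SQL Server Express is listed among the technologies, but how it is used is not shown here). The Carbonite check and the email step are left out. The sketch assumes C# 9 or later for the `record` type.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical record holding the two attributes the scan compares.
public record FileEntry(long Size, DateTime ModifiedUtc);

public static class DirectoryDiff
{
    // Walk the root directory and all subdirectories, recording the size and
    // modified date of every file. Note: this simple form throws if a
    // subdirectory cannot be read; a real scan would need to handle that.
    public static Dictionary<string, FileEntry> Scan(string root)
    {
        var snapshot = new Dictionary<string, FileEntry>(StringComparer.OrdinalIgnoreCase);
        foreach (var path in Directory.EnumerateFiles(root, "*", SearchOption.AllDirectories))
        {
            var info = new FileInfo(path);
            snapshot[path] = new FileEntry(info.Length, info.LastWriteTimeUtc);
        }
        return snapshot;
    }

    // Compare yesterday's snapshot with today's and classify each path.
    public static (List<string> Added, List<string> Updated, List<string> Deleted) Compare(
        IReadOnlyDictionary<string, FileEntry> previous,
        IReadOnlyDictionary<string, FileEntry> current)
    {
        // Present now but not before: added.
        var added = current.Keys
            .Where(p => !previous.ContainsKey(p))
            .OrderBy(p => p, StringComparer.Ordinal)
            .ToList();

        // Present before but not now: deleted.
        var deleted = previous.Keys
            .Where(p => !current.ContainsKey(p))
            .OrderBy(p => p, StringComparer.Ordinal)
            .ToList();

        // Present in both, but size or modified date differs: updated.
        // Records compare by value, so != checks both fields.
        var updated = current
            .Where(kv => previous.TryGetValue(kv.Key, out var old) && old != kv.Value)
            .Select(kv => kv.Key)
            .OrderBy(p => p, StringComparer.Ordinal)
            .ToList();

        return (added, updated, deleted);
    }
}
```

In a daily run, the previous day's snapshot would be loaded from storage, `Scan` would produce the current one, and the three lists returned by `Compare` would feed the summary counts and the per-category attachments.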

Technologies

- Visual Studio

- C#

- SQL Server Express

Sample daily email notification on smart phone:

Email detail (a file is attached for each category where the count is greater than zero):

Sample file attachment content: